GPUs are now widely used for general-purpose computing, not just for graphics. This article is a guide to "CUDA by Example," a book that introduces readers to general-purpose GPU programming. Understanding how to use CUDA (Compute Unified Device Architecture) effectively can significantly improve the performance of computationally intensive applications that parallelize well.

CUDA by Example provides a clear pathway for programmers to transition from traditional CPU programming to leveraging the vast parallel processing capabilities of GPUs. This transition is not just beneficial for high-performance computing but also opens up new possibilities in various fields such as machine learning, scientific computing, and real-time graphics rendering.

Whether you're a seasoned developer or a novice programmer, this article aims to give you the foundational knowledge you need to start GPU programming. We will cover the key concepts, practical applications, and resources available to help you learn CUDA.

Table of Contents

- What is CUDA?

- History of CUDA

- Getting Started with CUDA

- CUDA Programming Model

- Examples in CUDA

- Applications of CUDA

- Best Practices in CUDA Programming

- Resources and Further Reading

What is CUDA?

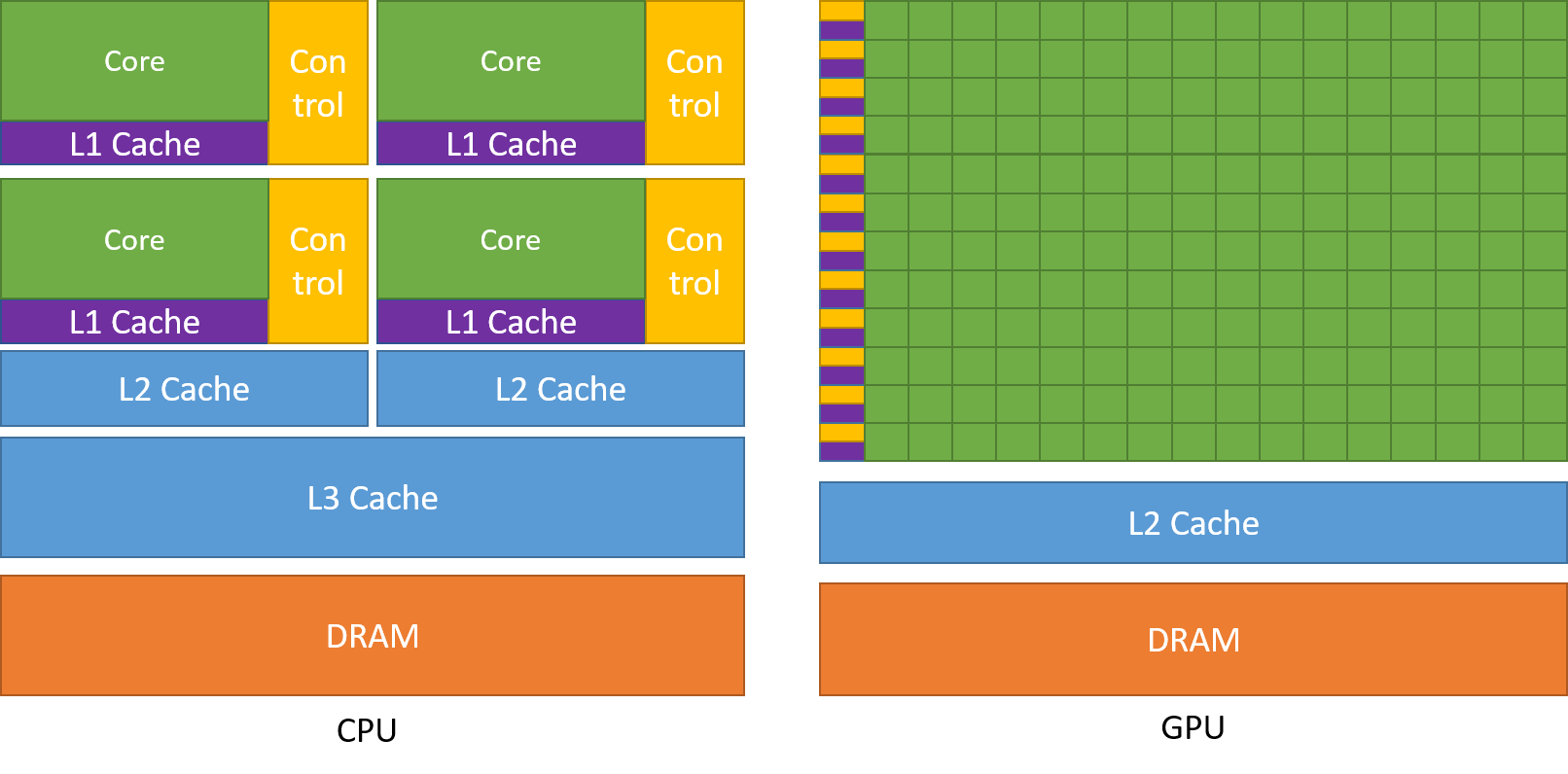

CUDA, or Compute Unified Device Architecture, is a parallel computing platform and programming model developed by NVIDIA. It allows developers to use a CUDA-enabled graphics processing unit (GPU) for general-purpose processing, an approach known as GPGPU (General-Purpose computing on Graphics Processing Units). CUDA provides an API and language extensions for programming GPUs, enabling programs to run thousands of threads in parallel.

Key Features of CUDA

- Parallel computing capabilities that enable high-performance applications.

- Support for C, C++, and Fortran, allowing integration with existing codebases.

- Extensive libraries and tools to facilitate GPU programming.

- Access to GPU resources for improved computational efficiency.

History of CUDA

CUDA was first introduced in 2006 by NVIDIA as a way to leverage their GPU technology for general-purpose computing. The initial release allowed developers to write code that could run on the GPU without needing to learn a new programming language. This innovation opened the doors for numerous applications across various fields.

Milestones in CUDA Development

- 2006: Introduction of CUDA with support for C programming.

- 2008: Release of CUDA 2.0, introducing support for multiple GPU architectures.

- 2010: CUDA 3.0 launched, adding support for the Fermi architecture along with new libraries and improved performance.

- 2020: CUDA 11 released, adding support for the Ampere architecture and enhancing AI and machine learning capabilities.

Getting Started with CUDA

To begin your journey with CUDA programming, you'll need to set up a suitable environment. Here are the steps to get started:

Prerequisites for CUDA Programming

- A CUDA-enabled GPU (Check NVIDIA's website for compatibility).

- CUDA Toolkit installed on your system.

- Familiarity with the C or C++ programming language.

Installing the CUDA Toolkit

Downloading and installing the CUDA Toolkit is essential. You can find the latest version on the NVIDIA developer website. Follow the installation instructions for your operating system, and ensure that you have the necessary drivers installed for your GPU.
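Once the toolkit and driver are installed, a quick sanity check is to ask the CUDA runtime which devices it can see. The following is a minimal sketch, not an official verification procedure; the function name listCudaDevices is hypothetical and chosen for illustration only.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Prints basic information about each CUDA device the runtime can see.
// Returns the number of devices, or -1 if the runtime call itself fails
// (for example, when no driver or no CUDA-capable GPU is present).
// Note: listCudaDevices is an illustrative name, not part of the CUDA API.
int listCudaDevices() {
    int count = 0;
    cudaError_t err = cudaGetDeviceCount(&count);
    if (err != cudaSuccess) {
        std::printf("cudaGetDeviceCount failed: %s\n", cudaGetErrorString(err));
        return -1;
    }
    for (int dev = 0; dev < count; ++dev) {
        cudaDeviceProp prop;
        if (cudaGetDeviceProperties(&prop, dev) != cudaSuccess) continue;
        std::printf("Device %d: %s, compute capability %d.%d, %zu MiB global memory\n",
                    dev, prop.name, prop.major, prop.minor,
                    prop.totalGlobalMem / (1024 * 1024));
    }
    return count;
}
```

Calling listCudaDevices() from main and compiling the file with nvcc should print one line per GPU. A return value of -1 usually points to a driver or installation problem rather than a problem in the code.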

CUDA Programming Model

The CUDA programming model consists of a set of extensions to the C programming language, along with a runtime library. Understanding the basic components of this model is crucial for effective programming.

Kernel Functions

In CUDA, a kernel is a function that runs on the GPU and is executed by many threads in parallel. You define a kernel function in your code and then launch it from the host (CPU), specifying how many thread blocks to run and how many threads each block contains.
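As a minimal sketch of defining and launching a kernel, the example below scales an array on the GPU. The kernel scaleArray and the host helper scaleOnGpu are hypothetical names used only for illustration, and the block size of 256 is an arbitrary but common choice.

```cuda
#include <cuda_runtime.h>
#include <vector>

// Kernel: each thread scales one element of the array.
__global__ void scaleArray(float *data, float factor, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;  // global thread index
    if (i < n) {                                    // the last block may be only partly used
        data[i] *= factor;
    }
}

// Host helper (illustrative): copies `values` to the GPU, scales them there,
// and copies the result back. Returns false if any CUDA call fails.
bool scaleOnGpu(std::vector<float> &values, float factor) {
    int n = static_cast<int>(values.size());
    if (n == 0) return true;
    size_t bytes = n * sizeof(float);

    float *dData = nullptr;
    if (cudaMalloc(&dData, bytes) != cudaSuccess) return false;

    bool ok = cudaMemcpy(dData, values.data(), bytes,
                         cudaMemcpyHostToDevice) == cudaSuccess;
    if (ok) {
        int threadsPerBlock = 256;  // assumed block size
        int blocks = (n + threadsPerBlock - 1) / threadsPerBlock;  // round up
        scaleArray<<<blocks, threadsPerBlock>>>(dData, factor, n);
        ok = cudaGetLastError() == cudaSuccess;  // catches launch errors
    }
    if (ok) {
        // A blocking cudaMemcpy on the default stream waits for the kernel.
        ok = cudaMemcpy(values.data(), dData, bytes,
                        cudaMemcpyDeviceToHost) == cudaSuccess;
    }
    cudaFree(dData);
    return ok;
}
```

The launch requests enough blocks to cover all n elements, so the bounds check inside the kernel is what keeps the surplus threads in the final block from writing past the end of the array.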

Memory Management in CUDA

CUDA provides different types of memory for efficient data management:

- Global Memory: Accessible by all threads but has high latency.

- Shared Memory: Faster access but limited in size and shared among threads in a block.

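- Local Memory: Private to each thread and used for automatic variables that do not fit in registers. It resides in device memory, so its latency is comparable to that of global memory.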
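To show how these memory types appear in code, here is a sketch of a per-block sum that reads from global memory, reduces in shared memory, and keeps its indices in per-thread automatic variables. The names blockSum, sumOnGpu, and kBlockSize are hypothetical, and the reduction assumes the block size is a power of two.

```cuda
#include <cuda_runtime.h>
#include <vector>

constexpr int kBlockSize = 256;  // threads per block; assumed to be a power of two

// Each block sums up to kBlockSize input elements and writes one partial sum.
__global__ void blockSum(const float *in, float *partial, int n) {
    __shared__ float tile[kBlockSize];   // shared memory: one copy per block

    int tid = threadIdx.x;               // automatic variable, private to the thread
    int i = blockIdx.x * blockDim.x + tid;

    tile[tid] = (i < n) ? in[i] : 0.0f;  // single read from global memory
    __syncthreads();                     // wait until the whole tile is loaded

    // Tree reduction carried out entirely in shared memory.
    for (int stride = blockDim.x / 2; stride > 0; stride /= 2) {
        if (tid < stride) tile[tid] += tile[tid + stride];
        __syncthreads();
    }
    if (tid == 0) partial[blockIdx.x] = tile[0];  // one write back to global memory
}

// Host helper (illustrative): sums `values` on the GPU and stores the total
// in `result`. Returns false if any CUDA call fails.
bool sumOnGpu(const std::vector<float> &values, float &result) {
    result = 0.0f;
    int n = static_cast<int>(values.size());
    if (n == 0) return true;
    int blocks = (n + kBlockSize - 1) / kBlockSize;

    float *dIn = nullptr, *dPartial = nullptr;
    std::vector<float> partial(blocks);
    bool ok = cudaMalloc(&dIn, n * sizeof(float)) == cudaSuccess &&
              cudaMalloc(&dPartial, blocks * sizeof(float)) == cudaSuccess &&
              cudaMemcpy(dIn, values.data(), n * sizeof(float),
                         cudaMemcpyHostToDevice) == cudaSuccess;
    if (ok) {
        blockSum<<<blocks, kBlockSize>>>(dIn, dPartial, n);
        ok = cudaGetLastError() == cudaSuccess &&
             cudaMemcpy(partial.data(), dPartial, blocks * sizeof(float),
                        cudaMemcpyDeviceToHost) == cudaSuccess;
    }
    cudaFree(dIn);       // cudaFree on a null pointer is a no-op
    cudaFree(dPartial);
    if (ok) {
        for (float p : partial) result += p;  // finish the sum on the host
    }
    return ok;
}
```

Each input element is read from global memory once, and all the repeated additions then happen in shared memory. Finishing the per-block partial sums on the host keeps the sketch short; a second kernel pass would be an alternative for large inputs.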

Examples in CUDA

One of the best ways to learn CUDA is by examining practical examples. Here are a few illustrative cases.

Simple Vector Addition

This classic example demonstrates how to perform vector addition using CUDA:

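```cuda
__global__ void vectorAdd(float *A, float *B, float *C, int N) {
    // Global index, so the kernel also works with more than one block.
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < N) {
        C[i] = A[i] + B[i];
    }
}
```

Matrix Multiplication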

Matrix multiplication is a more complex example. Each thread computes one element of the product of two N x N matrices stored in row-major order:

```cuda
__global__ void matrixMul(int *A, int *B, int *C, int N) {
    int row = blockIdx.y * blockDim.y + threadIdx.y;
    int col = blockIdx.x * blockDim.x + threadIdx.x;
    // Skip threads that fall outside the N x N matrix.
    if (row < N && col < N) {
        int sum = 0;
        for (int k = 0; k < N; ++k) {
            sum += A[row * N + k] * B[k * N + col];
        }
        C[row * N + col] = sum;
    }
}
```

Applications of CUDA

CUDA has a wide range of applications across different domains. Here are some notable examples:

Scientific Computing

Many scientific simulations, such as fluid dynamics and molecular modeling, benefit from the parallel processing capabilities of CUDA. Researchers can run complex simulations much faster than traditional CPU methods.

Machine Learning and AI

CUDA is heavily utilized in training neural networks and other machine learning algorithms. Frameworks like TensorFlow and PyTorch leverage CUDA to optimize their performance on GPUs.

Best Practices in CUDA Programming

To maximize your performance when programming with CUDA, consider these best practices:

- Minimize data transfer between host and device to reduce latency.

- Utilize shared memory to speed up data access among threads.

- Optimize kernel launches by adjusting block and grid sizes.

- Profile your code to identify bottlenecks and optimize accordingly.
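Two of these practices, tuning block sizes and measuring before optimizing, can be combined in a small experiment. The sketch below times the same kernel at several block sizes using CUDA events while keeping the data on the device the whole time. The names addOne, timeAddOne, and compareBlockSizes are hypothetical, and the block sizes tried are arbitrary. A dedicated profiler gives far more detail than this.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

__global__ void addOne(float *data, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) data[i] += 1.0f;
}

// Times one launch of addOne with the given block size.
// Returns elapsed milliseconds, or a negative value on CUDA error.
float timeAddOne(float *dData, int n, int threadsPerBlock) {
    cudaEvent_t start, stop;
    if (cudaEventCreate(&start) != cudaSuccess) return -1.0f;
    if (cudaEventCreate(&stop) != cudaSuccess) {
        cudaEventDestroy(start);
        return -1.0f;
    }

    int blocks = (n + threadsPerBlock - 1) / threadsPerBlock;  // round up
    cudaEventRecord(start);
    addOne<<<blocks, threadsPerBlock>>>(dData, n);
    cudaEventRecord(stop);
    cudaEventSynchronize(stop);  // wait for the kernel and the stop event

    float ms = -1.0f;
    bool ok = cudaGetLastError() == cudaSuccess &&
              cudaEventElapsedTime(&ms, start, stop) == cudaSuccess;
    cudaEventDestroy(start);
    cudaEventDestroy(stop);
    return ok ? ms : -1.0f;
}

// Illustrative driver: allocates once, keeps the data on the device across
// all launches, and prints the time measured for a few block sizes.
bool compareBlockSizes(int n) {
    float *dData = nullptr;
    if (cudaMalloc(&dData, n * sizeof(float)) != cudaSuccess) return false;
    cudaMemset(dData, 0, n * sizeof(float));

    bool ok = true;
    const int sizes[] = {64, 128, 256, 512};
    for (int threads : sizes) {
        float ms = timeAddOne(dData, n, threads);
        if (ms < 0.0f) { ok = false; break; }
        std::printf("%4d threads/block: %.3f ms\n", threads, ms);
    }
    cudaFree(dData);
    return ok;
}
```

The first timed launch typically includes one-time startup overhead, so running a warm-up launch or averaging over several repetitions gives more representative numbers.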

Resources and Further Reading

To further enhance your knowledge of CUDA programming, consider the following resources:

Conclusion

In conclusion, "CUDA by Example" is a valuable resource for anyone looking to learn general-purpose GPU programming. By mastering CUDA, developers can unlock new levels of performance and efficiency in their applications. We encourage you to keep experimenting and to engage with the CUDA community as you learn.
